While plagiarism has always been a major problem for the music industry, it was rarely catastrophic. But now that artificial intelligence (AI) tools are taking over, the problem has only gotten worse. While AI is extremely useful in a number of different industries, it isn’t all positive. For one, it’s absolutely inundating streaming platforms like Spotify with uninteresting AI music. Here’s why synthetic music is quickly becoming a big challenge for the music industry and whether it is even legal to create such content.

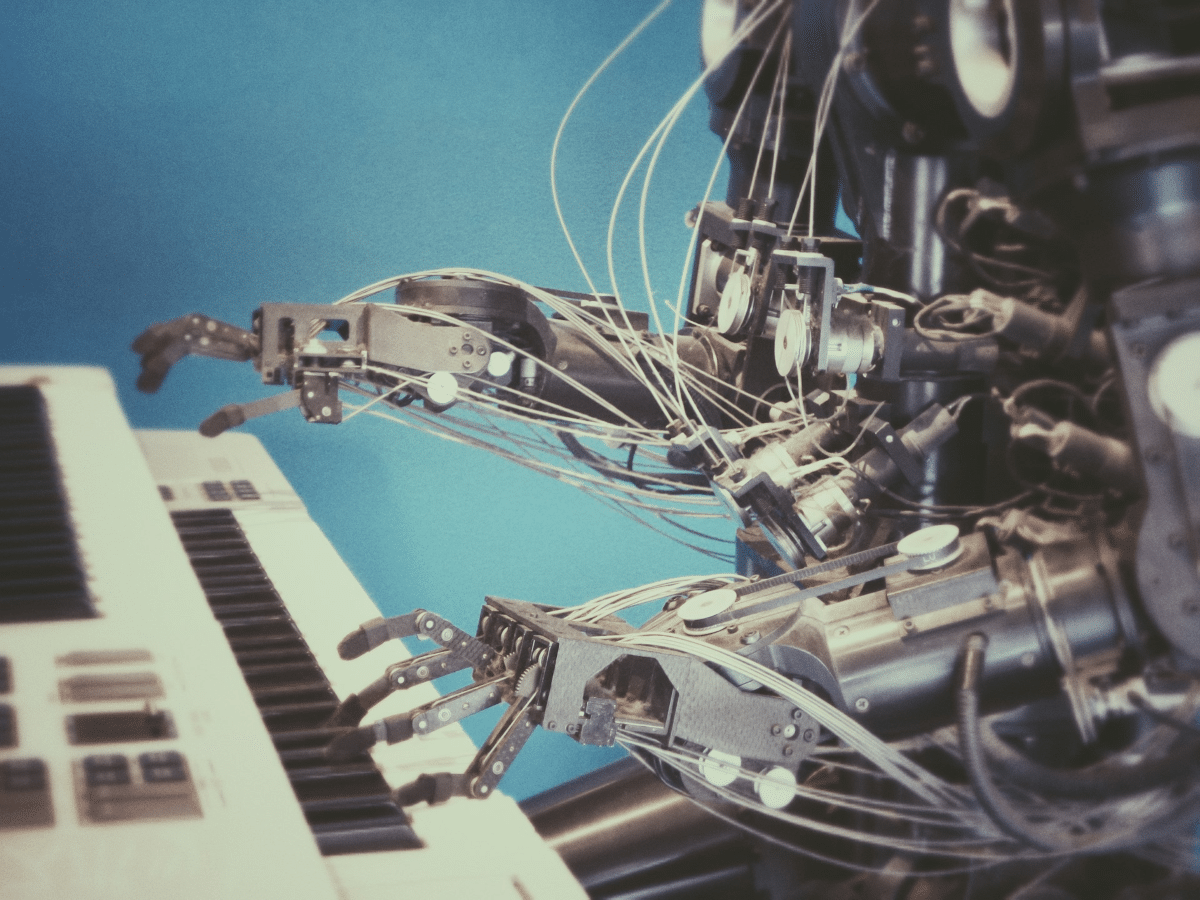

For the uninitiated, AI music is composed (or rather generated) using AI tools. Simply put, instead of a human creating music with the help of instruments, it is solely created using technology similar to the tech that powers AI chatbots.

Notably, AI music is different from AI playlists, which streaming platforms are experimenting with. For instance, earlier this year, Spotify announced an AI playlist tool that helps users curate a personalized playlist based on the artists, tracks, and genres that AI recommends.

How Is AI Music Created?

Thanks to platforms like Loudly, Canva, and OpenAI, creating AI music is very easy and takes only a few minutes, or even less. These platforms analyze musical data and learn patterns and styles to generate unique music based on their “learnings” from existing music.

🔮 By 2030 a majority of “popular” music will be fully generated by AI, including “singing” with acoustic and electronic instruments. People will subscribe to AI producing in the-style-of old and new songs. By 2040 there will be 75% less musicians. It all stated in 2023. pic.twitter.com/cDBfgjsXLh

— Brian Roemmele (@BrianRoemmele) January 9, 2023

However, there are multiple issues associated with AI music. Firstly, many believe that since it is created by algorithms fed on existing music, it is essentially copyright infringement (unless the original music was licensed). This also means that all new AI music will likely not take any innovative steps forward. If there is little to no innovation in the music industry, listeners will soon get bored and lose interest.

On a related note, as AI music leads to lower royalty earnings for human composers, it could hamper the music industry in the long term by making it too difficult to turn a profit. Some experts even fear that AI music can be used to manipulate listeners’ emotions.

AI music is a challenge for the entire music industry, affecting both composers and listeners. In his op-ed last year, UMG executive vice president Michael Nash said, “Often, it simply produces a flood of imitations — diluting the market, making original creations harder to find, and violating artists’ legal rights to compensation from their work.”

Mitch Glazier, the head of the Recording Industry Association of America, echoed similar views and said, “I think no matter what kind of content you produce, there’s a strong belief that you’re not allowed to copy it, create an AI model from it, and then produce new output that competes with the original that you trained on.”

To be sure, creating music using AI is not illegal (assuming the music it was trained on was licensed). However, producing music in someone else’s voice could make you liable for copyright infringement and impersonation.

Streaming Platforms Allow AI Music With Guardrails

Leading streaming platforms like YouTube and Spotify allow AI music with some guardrails. For instance, according to a Spotify spokesperson, the company “does not have a policy against artists creating content using autotune or AI tools, as long as the content does not violate our other policies, including our deceptive content policy, which prohibits impersonation.”

Spotify’s deceptive content policy states that creators should not replicate “the same name, image, and/or description as another existing creator.” Also, they should not pose as another person or a brand to mislead people. The platform, however, does not let AI models train on its content.

YouTube also allows AI-generated content with some exceptions. For instance, it prohibits deepfakes, which are outright illegal. It also says that creators need to disclose any realistic-looking synthetic content that’s uploaded to the platform.

Can You Tell Synthetic Music From Real Music?

In many instances, it is not easy to tell AI music from real music. However, just like other AI-generated content, there are ways to spot whether the music was created synthetically. Firstly, the audio quality of AI music might be lower than that of original music. Also, like other AI-generated content, AI music can contain strange inconsistencies.

Since AI is still a long way from reaching artificial general intelligence, the models are only as good as the data they are fed and cannot think critically. This can show up in AI-generated music, which might lack the emotions that music created by a human can capture.

AI Music Can Be a Side Gig

That said, creating AI music that complies with the rules of streaming platforms can be a good side gig. Many users are uploading AI music to streaming platforms, and some are even earning hundreds of dollars daily. However, it is not that simple: you cannot upload music to services like Spotify directly and need to go through a distributor or a label. If you just want to play around and share some of your creations, you can try free platforms like SoundCloud, which allow you to upload synthetic music directly.

A first-of-its-kind federal indictment accusing a man of making over $10 million by falsely boosting streaming numbers for AI-generated music demonstrates another threat to artists and a need for fraud-prevention vigilance by platforms. https://t.co/G6nLA80y5E

— Bloomberg Law (@BLaw) September 12, 2024

Notably, federal authorities recently arrested North Carolina resident Michael Smith, who they say was involved in a “brazen fraud.” Smith allegedly created hundreds of thousands of songs using AI and then streamed them continuously to earn royalty payments of $10 million.

In a statement, FBI Acting Assistant Director Christie M. Curtis said, “The FBI remains dedicated to plucking out those who manipulate advanced technology to receive illicit profits and infringe on the genuine artistic talent of others.”

AI regulations are still a work in progress in many jurisdictions, including the US. Many artists, media houses, and publishers have lashed out at AI companies for scraping their original content to train their models.

There are also calls to apply copyright laws to AI music. Earlier this year, Tennessee enacted a law protecting musicians’ voices from impersonation using AI, becoming the first US state to do so. According to Elizabeth Matthews, CEO of the American Society of Composers, Authors, and Publishers (ASCAP), the state’s Ensuring Likeness Voice and Image Security (ELVIS) Act is “about balancing the very real concerns that artificial intelligence raises for music creators while making room for responsible innovation.”

Going forward, we might see similar regulations in more states. For now, though, creating AI music with guardrails remains within the ambit of the law.